Abstract

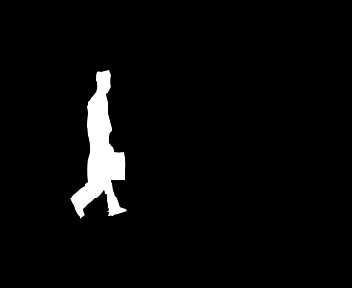

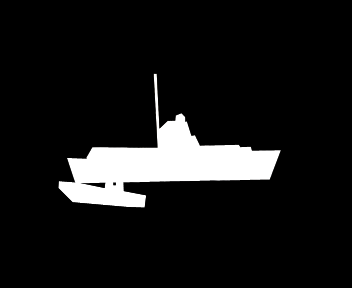

This work proposes a compressed-domain video saliency detection algorithm that employs global and local spatiotemporal (GLST) features. We first partially decode a compressed video bitstream to obtain motion vectors and DCT coefficients, from which the GLST features are extracted. More specifically, we extract the spatial features of rarity, compactness, and center prior from the DC coefficients by investigating the global color distribution in a frame. We also extract the spatial feature of texture contrast from the AC coefficients to identify regions whose local textures are distinct from those of neighboring regions. Moreover, we use the temporal features of motion intensity and motion contrast to detect visually important motions. Then, we generate a spatial saliency map and a temporal saliency map by linearly combining the spatial features and the temporal features, respectively. Finally, we adaptively fuse the two saliency maps into a spatiotemporal saliency map by comparing the robustness of the spatial features with that of the temporal features. Experimental results demonstrate that the proposed algorithm provides excellent saliency detection performance, and its very low complexity allows the detection to run in real time.
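The last two steps of the abstract, linear combination of features and adaptive fusion, can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the feature weights, and especially the robustness measure are assumptions made for illustration. The abstract does not define how robustness is computed, so the sketch substitutes a simple proxy, the spatial spread of each map around its centroid, and treats a more compact map as more robust. Maps are plain lists of rows, one value per block.

```python
def normalize(m):
    """Scale a 2-D map (list of rows) to [0, 1]; a constant map becomes all zeros."""
    lo = min(min(row) for row in m)
    hi = max(max(row) for row in m)
    if hi == lo:
        return [[0.0 for _ in row] for row in m]
    return [[(v - lo) / (hi - lo) for v in row] for row in m]


def linear_combine(features, weights):
    """Weighted sum of equally sized feature maps, renormalized to [0, 1].

    The weights are hypothetical; the abstract only says the combination is linear.
    """
    rows, cols = len(features[0]), len(features[0][0])
    out = [[0.0] * cols for _ in range(rows)]
    for feature, w in zip(features, weights):
        f = normalize(feature)
        for y in range(rows):
            for x in range(cols):
                out[y][x] += w * f[y][x]
    return normalize(out)


def spread(m):
    """Spatial variance of a map's mass around its centroid (lower = more compact)."""
    total = sum(sum(row) for row in m)
    if total == 0:
        return float("inf")  # an empty map carries no usable evidence
    cy = sum(y * v for y, row in enumerate(m) for v in row) / total
    cx = sum(x * v for row in m for x, v in enumerate(row)) / total
    return sum(
        v * ((y - cy) ** 2 + (x - cx) ** 2)
        for y, row in enumerate(m)
        for x, v in enumerate(row)
    ) / total


def fuse(spatial, temporal):
    """Adaptive fusion with an ASSUMED robustness proxy of 1 / (1 + spread).

    Returns the fused map and the weight given to the spatial map.
    """
    r_s = 1.0 / (1.0 + spread(spatial))
    r_t = 1.0 / (1.0 + spread(temporal))
    w_s = 0.5 if r_s + r_t == 0 else r_s / (r_s + r_t)
    fused = [
        [w_s * s + (1.0 - w_s) * t for s, t in zip(s_row, t_row)]
        for s_row, t_row in zip(spatial, temporal)
    ]
    return fused, w_s


# A compact spatial map versus a scattered temporal map.
spatial_map = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
temporal_map = [[1, 0, 1], [0, 0, 0], [1, 0, 1]]
fused_map, spatial_weight = fuse(spatial_map, temporal_map)
```

In this toy case the spatial map is concentrated in a single block while the temporal map is scattered over the four corners, so the fusion leans toward the spatial map. Any other robustness measure can be swapped into `fuse` without changing the rest of the sketch.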

Comparison with the Conventional Algorithms

Rhinoceros

Bird1

Bird2

Horse

Fox

Sunflower

Ski

Board

Flight

Wildcat

Comparative Video Sequences

Reference

[1] NTT dataset, http://www.brl.ntt.co.jp/people/akisato/saliency3.html.

[2] MPEG database, http://media.xiph.org/video/derf/.

[3] MCL dataset, http://mcl.korea.ac.kr/projects/MCLDataset/.

[4] J. Harel, C. Koch, and P. Perona, “Graph-based visual saliency,” in Proc. Adv. Neural Inf. Process. Syst., 2006, pp. 545–552.

[5] J.-S. Kim, J.-Y. Sim, and C.-S. Kim, “Multiscale saliency detection using random walk with restart,” IEEE Trans. Circuits Syst. Video Technol., vol. 24, no. 2, pp. 198–210, Feb. 2014.

[6] B. Schauerte and R. Stiefelhagen, “Quaternion-based spectral saliency detection for eye fixation prediction,” in Proc. European Conf. Comput. Vis., Oct. 2012, pp. 116–129.

[7] Y. Li, Y. Zhou, L. Xu, X. Yang, and J. Yang, “Incremental sparse saliency detection,” in Proc. IEEE ICIP, Nov. 2009, pp. 3093–3096.

[8] Y. Li, Y. Zhou, J. Yan, and J. Yang, “Visual saliency based on conditional entropy,” in Proc. Asian Conf. Comput. Vis., Sep. 2009, pp. 246–257.

[9] H. J. Seo and P. Milanfar, “Static and space-time visual saliency detection by self-resemblance,” J. Vision, vol. 9, no. 12, pp. 1–27, Nov. 2009.

[10] X. Hou and L. Zhang, “Saliency detection: A spectral residual approach,” in Proc. IEEE CVPR, Jun. 2007, pp. 1–8.

[11] R. Achanta, S. Hemami, F. Estrada, and S. Susstrunk, “Frequency-tuned salient region detection,” in Proc. IEEE CVPR, Jun. 2009, pp. 1597–1604.

[12] C. Yang, L. Zhang, H. Lu, X. Ruan, and M.-H. Yang, “Saliency detection via graph-based manifold ranking,” in Proc. IEEE CVPR, Jun. 2013, pp. 3166–3173.

[13] M.-M. Cheng, G.-X. Zhang, N. Mitra, X. Huang, and S.-M. Hu, “Global contrast based salient region detection,” in Proc. IEEE CVPR, Jun. 2011, pp. 409–416.

[14] W. Zhu, S. Liang, Y. Wei, and J. Sun, “Saliency optimization from robust background detection,” in Proc. IEEE CVPR, Jun. 2014, pp. 2814–2821.

[15] F. Perazzi, P. Krahenbuhl, Y. Pritch, and A. Hornung, “Saliency filters: Contrast based filtering for salient region detection,” in Proc. IEEE CVPR, Jun. 2012, pp. 733–740.

[16] Y. Fang, W. Lin, Z. Chen, C.-M. Tsai, and C.-W. Lin, “A video saliency detection model in compressed domain,” IEEE Trans. Circuits Syst. Video Technol., vol. 24, no. 1, pp. 27–38, Jan. 2014.